May 5th - May 11th

Finished my first paper in the paper implementation project. It was super insightful and fun to work through all of the math and ideas manually. I implemented everything from scratch and tested it without looking at existing implementations and without LLM help. The paper is quite confusing, though, and I feel like it was a lot easier because I already understood many of the concepts from Karpathy's YouTube series. Still, a rate of one paper per week isn't great, but hopefully it will pick up as I get more familiar with the process.

Project

I finished up my implementation, reading, flash cards, and questions for "Learning Representations by Back-propagating Errors". I had an interesting moment when I had first implemented the algorithm but hadn't read through all of their results yet. I skimmed ahead for examples they tested their implementation on and came across the two-bit adder problem. When I trained my neural net on it with a small number of nodes per layer, it kept getting almost all of the cases correct, but never all of them. Frustrated, I just bumped up the number of nodes per layer in my implementation, and it worked.

Later, when I read through the paper, I found they had the same problem with the two-bit adder getting stuck, and that their proposed solution was exactly what I had done. This made me laugh, but it was cool to discover that for myself.
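For reference, here is a minimal sketch of that kind of experiment, not my actual implementation. It assumes a single hidden layer of sigmoid units, squared error, and plain online gradient descent. The names `make_adder_data` and `train`, the learning rate, and the weight initialization range are all made up for illustration.

```python
import math
import random

def make_adder_data():
    """All 16 pairs (a, b) with a, b in 0..3: 4 input bits -> 3 sum bits."""
    data = []
    for a in range(4):
        for b in range(4):
            s = a + b
            x = [(a >> 1) & 1, a & 1, (b >> 1) & 1, b & 1]
            y = [(s >> 2) & 1, (s >> 1) & 1, s & 1]
            data.append((x, y))
    return data

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    # W1[j] holds the input weights of hidden unit j; W2[k] those of output k.
    h = [sigmoid(sum(xi * w for xi, w in zip(x, row)) + b) for row, b in zip(W1, b1)]
    o = [sigmoid(sum(hj * w for hj, w in zip(h, row)) + b) for row, b in zip(W2, b2)]
    return h, o

def train(hidden, epochs=2000, lr=0.5, seed=0):
    """Train on the adder; return (per-epoch losses, number of patterns fully correct)."""
    rng = random.Random(seed)
    data = make_adder_data()
    # Assumed initialization: small uniform random weights, zero biases.
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(3)]
    b2 = [0.0] * 3
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:  # one weight update per pattern
            h, o = forward(x, W1, b1, W2, b2)
            total += 0.5 * sum((ok - yk) ** 2 for ok, yk in zip(o, y))
            # Output deltas: derivative of squared error through the sigmoid.
            d_out = [(ok - yk) * ok * (1 - ok) for ok, yk in zip(o, y)]
            # Hidden deltas: push output deltas back through W2 before updating it.
            d_hid = [hj * (1 - hj) * sum(d_out[k] * W2[k][j] for k in range(3))
                     for j, hj in enumerate(h)]
            for k in range(3):
                for j in range(hidden):
                    W2[k][j] -= lr * d_out[k] * h[j]
                b2[k] -= lr * d_out[k]
            for j in range(hidden):
                for i in range(4):
                    W1[j][i] -= lr * d_hid[j] * x[i]
                b1[j] -= lr * d_hid[j]
        losses.append(total)
    # A pattern counts as correct only if all three thresholded output bits match.
    correct = sum(
        [int(ok > 0.5) for ok in forward(x, W1, b1, W2, b2)[1]] == y
        for x, y in data
    )
    return losses, correct
```

Calling `train(hidden=3)` and `train(hidden=8)` and comparing the returned `correct` counts is one way to rerun the "just add more nodes" experiment. The result depends on the seed and learning rate, so a narrow network may or may not get stuck on any given run.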

In my old syllabus I had myself going through a bunch of optimizations like ReLU, Adam, etc., but as I was going through it I realized that I didn't like the ordering of my papers. The Glorot paper had too many references to techniques and problems from the years between the last paper I read and its publication date in 2011. Therefore I reworked my syllabus ordering here to be a little more chronological. It's not strictly chronological, but I'm hoping that if I go through LeNet before some of these other optimization techniques, I'll get a better understanding of why they are needed in the first place. I'll also try to make my implementation of LeNet a bit cleaner so I can extend it with different optimizers, activation functions, and so on.
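One possible shape for that extensibility, sketched under my own assumptions: each activation is a function bundled with its derivative, and the optimizer is a small object with a `step` method. The names `Activation`, `SIGMOID`, `RELU`, and `SGD` are hypothetical and not taken from any library or paper.

```python
import math
from dataclasses import dataclass
from typing import Callable, List

@dataclass(frozen=True)
class Activation:
    f: Callable[[float], float]   # forward function
    df: Callable[[float], float]  # derivative with respect to the pre-activation z

def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

SIGMOID = Activation(f=_sigmoid, df=lambda z: _sigmoid(z) * (1.0 - _sigmoid(z)))
RELU = Activation(f=lambda z: max(0.0, z), df=lambda z: 1.0 if z > 0 else 0.0)

class SGD:
    """Plain gradient descent; other optimizers could expose the same step() method."""

    def __init__(self, lr: float):
        self.lr = lr

    def step(self, params: List[float], grads: List[float]) -> None:
        # Update the parameters in place.
        for i, g in enumerate(grads):
            params[i] -= self.lr * g
```

With this layout a layer only needs to hold an `Activation` and call `f` on the forward pass and `df` on the backward pass, so swapping sigmoid for ReLU would not touch the training loop.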

Math Academy

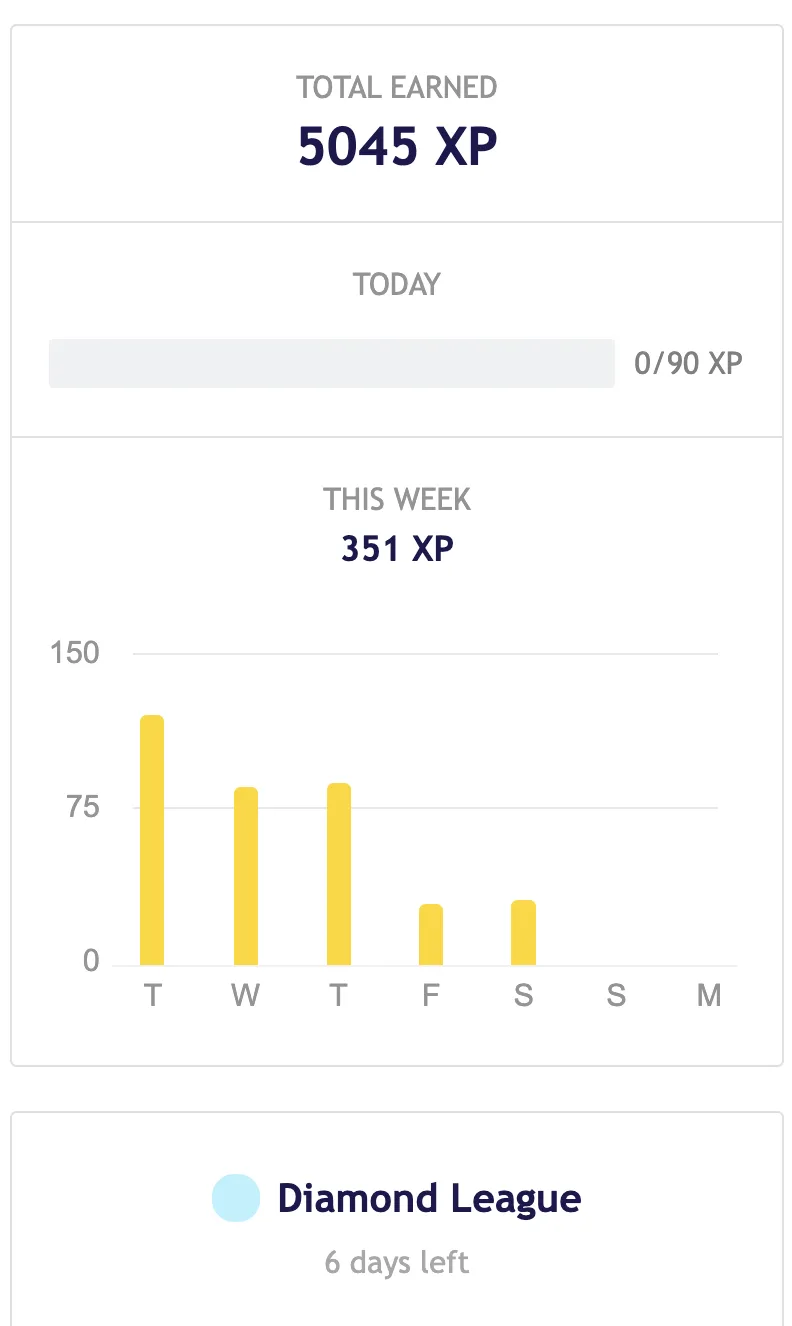

Still in diamond league and making good progress! Did around 400 XP this week.

ML Learning

Finished Andrew Ng's second course and am getting started on his third one!